On Solaris 11 you can virtualize with Zones or with Oracle VM Server for SPARC. Oracle VM is only available on compatible SPARC hardware, where the hypervisor runs within the firmware. Zones, on the other hand, let you virtualize on Intel or SPARC hardware, with the restriction that every zone shares the kernel of the host, so you can only virtualize the OS that you are currently running. Zones are a great choice when you need multiple instances of Solaris on a single host, for example to host web servers or small database instances. Zones also need very little disk space and few system resources.

What’s new with Zones in Oracle Solaris 11

Zones have been available as a form of virtualization since Solaris 10. With the release of Solaris 11 they take a massive leap forward in how sparingly they use resources compared with the architecture in Solaris 10. Zones are application containers that are maintained by the running operating system. The default non-global zone in the Oracle Solaris 11 release is solaris, described in the solaris(5) man page. The solaris non-global zone is supported on all sun4u, sun4v, and x86 architecture machines.

To verify the Oracle Solaris release and the machine architecture, type:

uname -r -m

- -r prints the OS release (5.11)

- -m prints the machine hardware name

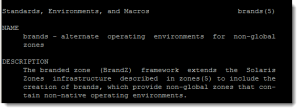
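The same check can be scripted. The following is a minimal sketch rather than an official support test: the function name supports_solaris_brand is hypothetical, and the i86pc value assumes that uname -m reports x86 hardware under that name.

```shell
# Succeed if a release/architecture pair matches the platforms listed for
# the default solaris brand. The values are passed in as arguments so the
# function can be tried on any machine.
supports_solaris_brand() {
    release=$1 machine=$2
    [ "$release" = "5.11" ] || return 1
    case $machine in
        sun4u|sun4v|i86pc) return 0 ;;   # i86pc: assumed x86 machine name
        *) return 1 ;;
    esac
}

# Check the host this script runs on.
if supports_solaris_brand "$(uname -r)" "$(uname -m)"; then
    echo "solaris brand zones are supported on this host"
else
    echo "this host is not a Solaris 11 sun4u, sun4v or x86 machine"
fi
```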

The solaris zone uses the branded zones framework described in the brands(5) man page to run zones installed with the same software as is installed in the global zone.

The system software in a solaris non-global zone must always be in sync with the global zone. The system software packages within the zone are managed with the new Solaris Image Packaging System (IPS), and solaris non-global zones use IPS to keep their software at the same level as the global zone. This reduces the software management effort across the instances of Solaris running within your zones.

Non-global zones use boot environments. Zones are integrated with beadm, the user interface command for managing ZFS Boot Environments (BEs). To view the zone BEs on your system, type the following:

# zoneadm list
zbe
global
test2

The beadm command is supported inside zones for pkg update, just as in the global zone. The beadm command can delete any inactive zone BE associated with the zone.

Zones cheat sheet

The main commands that we will use to manage zones are zoneadm (/usr/sbin/zoneadm) and zonecfg (/usr/sbin/zonecfg). For more details see the relevant man pages; the most common invocations are highlighted here.

Create a zone with an exclusive IP network stack:

# zonecfg -z testzone
testzone: No such zone configured
Use 'create' to begin configuring a new zone.
zonecfg:testzone> create
zonecfg:testzone> set zonepath=/zones/testzone
zonecfg:testzone> set autoboot=true
zonecfg:testzone> verify
zonecfg:testzone> commit
zonecfg:testzone> exit

List all running zones verbosely:

# zoneadm list -v

List all configured zones:

# zoneadm list -c

List all installed zones:

# zoneadm list -i

Install a zone:

# zoneadm -z testzone install

Boot a zone:

# zoneadm -z testzone boot

List the configuration of a zone:

# zonecfg -z testzone info

Log in to the console of a zone:

# zlogin -C testzone

Shut down a zone:

# zoneadm -z testzone shutdown

Monitor a zone for CPU, memory and network utilization every 10 seconds:

# zonestat -z testzone 10

Create ZFS Data set for your Zones

The first step in creating a zone is usually to plan where it will be stored. The space taken is quite small compared to a full install of Solaris; the example here takes 1 GB of disk space. Even so, zones are usually stored in their own ZFS data set so that properties and quotas can be managed individually as required.

As root we can create the data set:

# zfs create -o mountpoint=/zones rpool/zones

This creates the rpool/zones ZFS data set and mounts it at the /zones directory. If the directory exists it must be empty; if it does not exist it is created. The mount point is set as a property with -o:

- -o mountpoint=/zones

The shorter -m /zones form is an option of zpool create, where it sets the mount point of a new pool's root data set; zfs create does not accept it.
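The empty-directory requirement can be checked before running zfs create. This is a minimal sketch assuming a POSIX shell; mountpoint_usable is a hypothetical helper name and not part of ZFS.

```shell
# Succeed if DIR can serve as the mount point: it is either absent
# (zfs create will make it) or an existing, empty directory.
mountpoint_usable() {
    dir=$1
    if [ ! -e "$dir" ]; then
        return 0
    fi
    [ -d "$dir" ] && [ -z "$(ls -A "$dir")" ]
}

# Report on /zones without changing anything.
if mountpoint_usable /zones; then
    echo "/zones is usable: zfs create -o mountpoint=/zones rpool/zones"
else
    echo "/zones exists and is not an empty directory"
fi
```

The check only reads the directory, so it is safe to run repeatedly before the data set is created.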

Creating a virtual interface

A virtual network lets you use virtual network interface cards (VNICs) rather than physical devices directly. Since a physical device can have more than one VNIC configured on top of it, you can create a multi-node network on top of just a few physical devices, possibly even a single one, and so build a network within a single system. Being able to configure a number of full-featured VNICs on top of a single physical device opens the door to building as many virtual servers (zones) as necessary, up to what the system can support, connected by a network and all within a single operating system instance.

In the lab we have just one physical NIC, so we will create a VNIC for the zone. A VNIC can be created automatically during the configuration of the zone if we specify anet (automatic network) as the network property. Creating the VNIC ahead of time provides a better understanding of the process and more control. VNICs are created with dladm (/usr/sbin/dladm).

# dladm create-vnic -l net0 vnic1

Here we create a VNIC called vnic1 that is linked to the physical card net0.

If we want our own virtual network that is not connected to the physical network, we can create a virtual switch (an etherstub) and connect VNICs to that:

# dladm create-etherstub stub0
# dladm create-vnic -l stub0 vnic1
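When several zones share one private network, the same two dladm subcommands are simply repeated per VNIC. The sketch below only prints the commands as a dry run; plan_private_net is a hypothetical helper, and the vnic1, vnic2, ... numbering is an assumption that extends the naming used here.

```shell
# Print the dladm commands for one etherstub with COUNT VNICs attached.
# Nothing is executed; review the output, then run it as root on the host.
plan_private_net() {
    stub=$1 count=$2
    echo "dladm create-etherstub $stub"
    i=1
    while [ "$i" -le "$count" ]; do
        echo "dladm create-vnic -l $stub vnic$i"
        i=$((i + 1))
    done
}

# Plan a private network for three zones.
plan_private_net stub0 3
```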

Creating the zone

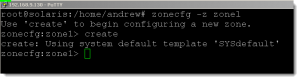

The new zone can be created with the command zonecfg.

# zonecfg -z zone1

Running this command takes us into the interactive zonecfg shell. If no existing zone with the supplied name (zone1 in this case) is found, we are prompted to use the create command to instantiate the zone.

zonecfg:zone1> create
zonecfg:zone1> set zonepath=/zones/zone1

Here we place the zone in the /zones/zone1 folder; it makes sense to name the folder after the zone. We could set other properties, but we will go straight ahead and create the network settings:

zonecfg:zone1> add net
zonecfg:zone1:net> set physical=vnic1
zonecfg:zone1:net> end
zonecfg:zone1> verify
zonecfg:zone1> commit
zonecfg:zone1> exit

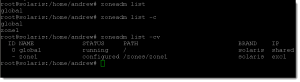

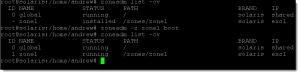
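The interactive session can also be replayed non-interactively, since zonecfg accepts a command file through its -f option. The following is a sketch under stated assumptions: zone_commands is a hypothetical helper and /tmp/zone1.cfg is an arbitrary file name.

```shell
# Print the zonecfg subcommands used in the interactive session,
# parameterised by zone name and VNIC name.
zone_commands() {
    zone=$1 vnic=$2
    cat <<EOF
create
set zonepath=/zones/$zone
add net
set physical=$vnic
end
verify
commit
EOF
}

# Preview the command file for zone1.
zone_commands zone1 vnic1

# To apply it on the Solaris host (not run here):
#   zone_commands zone1 vnic1 > /tmp/zone1.cfg
#   zonecfg -z zone1 -f /tmp/zone1.cfg
```

Keeping the subcommands in a file makes it easy to create further zones that differ only in name and VNIC.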

We have the zone configured but not installed. We can verify this with the command zoneadm:

# zoneadm list ## only shows the global zone
# zoneadm list -c ## shows all zones
# zoneadm list -cv ## shows all zones verbosely

Installing the zone

In preparation for installing the zone we will create an XML file that is read during the install to set the time zone, IP address and so on. It is created with sysconfig (/usr/sbin/sysconfig). The description from the man page shows the purpose of the command: "The sysconfig utility is the interface for un-configuring and re-configuring a Solaris instance. A Solaris instance is defined as a boot environment in either a global or a non-global zone."

When creating the sysconfig profile we specify the name of the output file we want to create:

# sysconfig create-profile -o /root/zone1-profile.xml

From then on we run through the wizard, pressing F2 to proceed to the next item in the menu.

We are now ready to install the new zone. The installation uses the IPS repository configured in the global zone, that is, in the OS running on the host, so we need to be online with the repository available. We pass in the profile that we just created to supply the settings for the new zone instance.

# zoneadm -z zone1 install -c /root/zone1-profile.xml

The zone will take a little time to install depending on the speed of the network, repository and local host, but once installed zoneadm list -cv will show the zone as installed.
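For scripting, zoneadm list also accepts -p, which prints one colon-delimited line per zone in the form zoneid:zonename:state:zonepath:uuid:brand:ip-type. The sketch below extracts the state field; zone_state is a hypothetical helper, and the two sample lines are illustrative rather than captured from a real system.

```shell
# Read `zoneadm list -cp` style lines on stdin and print the state of ZONE.
# Exits non-zero if the zone is not listed.
zone_state() {
    awk -F: -v zone="$1" '
        $2 == zone { print $3; found = 1 }
        END { exit !found }
    '
}

# Illustrative input only. On a real host use:
#   zoneadm list -cp | zone_state zone1
printf '%s\n' \
    '0:global:running:/::solaris:shared' \
    '-:zone1:installed:/zones/zone1::solaris:excl' |
    zone_state zone1
```

A wrapper script could call this in a loop to wait until the install has finished before booting the zone.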

Booting the zone

The zone is installed, but we still need to boot it:

# zoneadm -z zone1 boot

Listing the zone again with zoneadm list -cv should now show the zone as running. In the following graphic we first see the status as installed; once the zone is booted, the new output shows running.

We can connect to the zone with SSH as with any other host. We can also use:

# zlogin -C zone1

This gives us console access to the zone from the host OS. We are presented with a login prompt, and we return to that prompt when we log out of the zone. To exit the zone console we type ~. (a tilde followed by a dot).

If we check the size of the zone's directory on the host, it should not consume a lot of space; on this system it is just 1 GB.


Enable the zone to auto-start

For a zone to auto-start it must have the correct property value set in the zone configuration. We also need the zones service to be running on the host. So first we enable this:

# svcadm enable svc:/system/zones:default

With the service enabled we can then set the zone's autoboot property:

# zonecfg -z zone1
zonecfg:zone1> set autoboot=true
zonecfg:zone1> verify
zonecfg:zone1> commit
zonecfg:zone1> exit
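The property can also be set and checked without entering the interactive shell, because zonecfg accepts subcommands on the command line. This is a sketch under stated assumptions: autoboot_enabled is a hypothetical helper, and it assumes that zonecfg info autoboot prints a line of the form "autoboot: true".

```shell
# Read `zonecfg -z ZONE info autoboot` output on stdin and succeed
# only if the autoboot property is reported as true.
autoboot_enabled() {
    grep -q '^autoboot: *true$'
}

# Illustrative input only. On the Solaris host use:
#   zonecfg -z zone1 "set autoboot=true"
#   zonecfg -z zone1 info autoboot | autoboot_enabled
printf 'autoboot: true\n' | autoboot_enabled && echo "zone will start at boot"
```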

Summary

We now have a running zone configured on our Solaris 11 system. We have seen how we can create virtual network cards and networks within the host and install and manage multiple zones within a single host.